April 2025

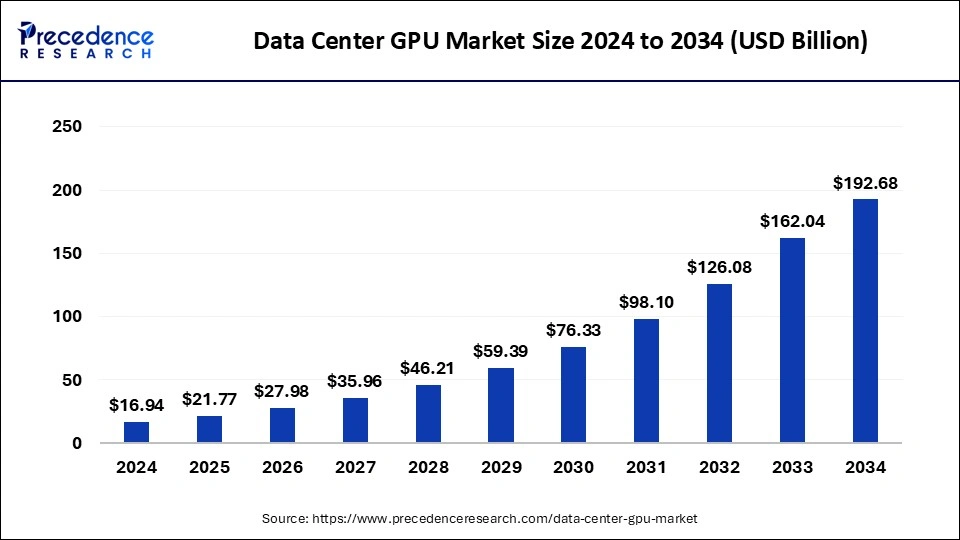

The global data center GPU market was estimated at USD 16.94 billion in 2024 and is projected to grow from USD 21.77 billion in 2025 to approximately USD 192.68 billion by 2034, a CAGR of 27.52% from 2025 to 2034. The North America data center GPU market exceeded USD 6.44 billion in 2024 and is expected to grow at a CAGR of 27.56% over the forecast period. Market sizing and forecasts are revenue-based (USD million/billion), with 2024 as the base year.

Rising demand for artificial intelligence and the growth of the PC gaming and gaming console industries are the key drivers of the data center GPU market.

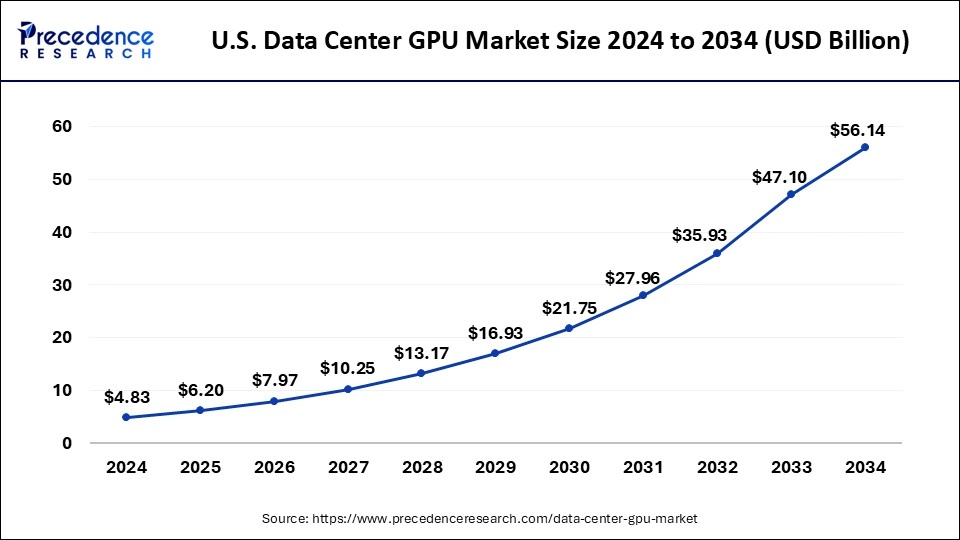

The U.S. data center GPU market size reached USD 4.83 billion in 2024 and is predicted to attain around USD 56.14 billion by 2034, at a CAGR of 27.80% from 2025 to 2034.
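The growth rates quoted above follow the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The minimal Python sketch below applies it to the report's market sizes. The helper name `cagr` is hypothetical, and the sketch assumes ten compounding years from the 2024 base year to 2034.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.25 means 25%)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in this report, in USD billion.
global_2024, global_2025, global_2034 = 16.94, 21.77, 192.68
us_2024, us_2034 = 4.83, 56.14

# Assumption: ten compounding years from the 2024 base year to 2034.
global_rate = cagr(global_2024, global_2034, 10)
us_rate = cagr(us_2024, us_2034, 10)

# For comparison: nine compounding years from the 2025 estimate to 2034.
global_rate_from_2025 = cagr(global_2025, global_2034, 9)

print(f"Global, 2024 to 2034: {global_rate:.2%}")
print(f"U.S., 2024 to 2034:   {us_rate:.2%}")
print(f"Global, 2025 to 2034: {global_rate_from_2025:.2%}")
```

The first two results come out at roughly 27.5% and 27.8%, matching the quoted 27.52% and 27.80% to within rounding. Compounding the 2025 estimate over nine years instead gives roughly 27.4%, which suggests the stated rates are measured from the 2024 base-year values.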

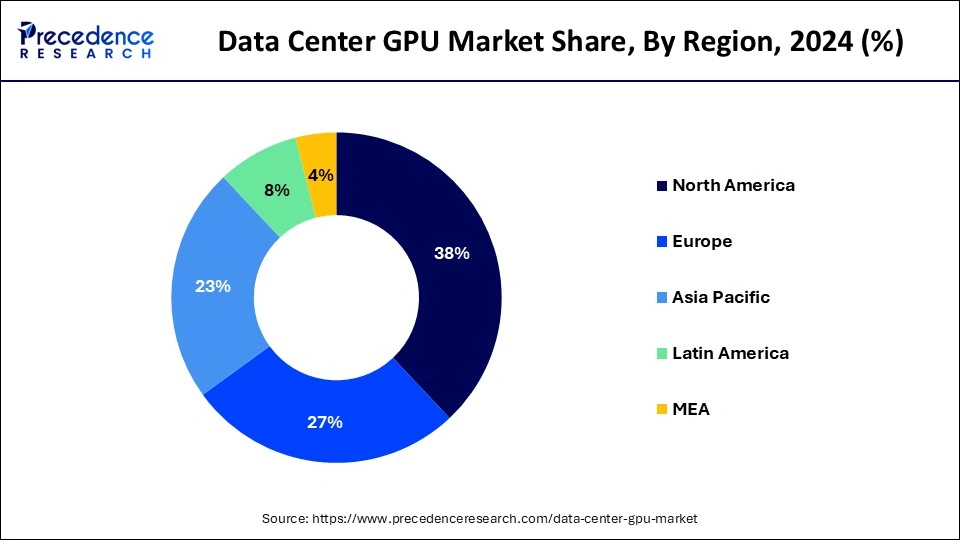

North America dominated the data center GPU market in 2024. The regional market is supported by technological advancements and by research that relies on high-end GPUs. Key factors include a strong high-tech industry, extensive research and development, and rising investment in artificial intelligence (AI) and machine learning (ML) applications. Growth is driven in particular by businesses across many sectors adopting cloud services, especially in the United States and Canada.

Asia Pacific is expected to be the fastest-growing region in the data center GPU market over the forecast period. Demand in the region is driven mainly by the rapidly expanding IT and telecommunications sector, the growing e-commerce industry, an increasing focus on AI and ML applications, and wider adoption of cloud computing by businesses. Continued advancements in data center GPUs are also expected to propel global market growth.

Graphics processing units (GPUs) are widely used in data centers because their parallel processing capabilities make them well suited to applications such as scientific computing, machine learning, and big data processing. Unlike central processing units (CPUs), which have a small number of cores optimized for sequential tasks, GPUs contain thousands of simpler cores that execute the same operation on many data elements simultaneously. Each GPU also has its own dedicated memory to hold the data it processes.

Training advanced neural networks is a crucial part of AI and ML, and GPU servers accelerate it significantly. Vendors in the data center GPU market have seen rising demand for products designed specifically for AI workloads, such as the NVIDIA A100 Tensor Core GPU. The global market is expanding as industries including healthcare, finance, and autonomous vehicles adopt GPU servers to process large datasets and improve the accuracy of AI models.

| Report Coverage | Details |
| --- | --- |
| Market Size by 2034 | USD 192.68 Billion |
| Market Size in 2025 | USD 21.77 Billion |
| Growth Rate from 2025 to 2034 | CAGR of 27.52% |
| Largest Market | North America |
| Base Year | 2024 |
| Forecast Period | 2025 to 2034 |
| Segments Covered | Deployment Model, Function, End-user, and Regions |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Rising adoption of multi-cloud strategies

The increasing adoption of multi-cloud strategies and network upgrades to support 5G are significantly driving the growth of the data center GPU market. A multi-cloud strategy uses cloud computing services from two or more providers to deploy specific applications. Businesses adopt multi-cloud architectures to prevent data loss or downtime from localized failures, comply with security regulations, and meet workload requirements, while avoiding dependence on a single cloud service provider. In parallel, investment in communication network infrastructure is rising to support the transition from 3G and 4G to 5G.

Economic barriers

The economies of scale achieved by GPU manufacturers create a substantial barrier for data center GPU server manufacturers considering backward integration. A server manufacturer attempting to produce its own GPUs would struggle to match these economies of scale, which would weaken its ability to price competitively and to invest in research and development. This can limit its effectiveness in the highly competitive data center GPU market.

Advancements in server technology

Advancements in server technology that support machine learning (ML) and deep learning (DL) are an emerging trend driving market growth. Data is central to enterprise decision-making, and success depends on analyzing it effectively, so companies increasingly use big data, ML, and DL technologies to analyze large datasets. To meet the computing demands of high-performance computing (HPC) workloads, vendors in the data center GPU market are introducing servers equipped with GPUs, field-programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs).

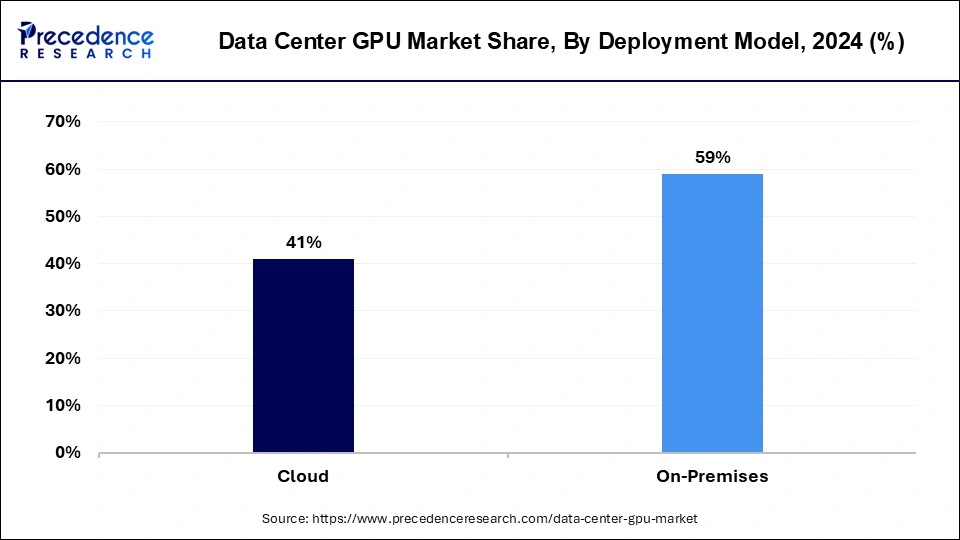

The on-premises segment dominated the data center GPU market in 2024. On-premises deployment gives organizations greater control over their data and systems, since they manage their own hardware and software rather than relying on service providers. Because data stays within the organization's premises, this approach can provide higher levels of security and data privacy. Although on-premises solutions require significant upfront investment, ownership gives organizations control over long-term costs.

The cloud segment is projected to grow at the fastest rate in the data center GPU market over the forecast period. Offerings such as AI-as-a-Service and cloud-based machine learning require powerful data processing capabilities. Enterprises, a major user segment, are deploying data center GPUs in their in-house data centers and private clouds, where the GPUs let them handle compute-intensive workloads more efficiently.

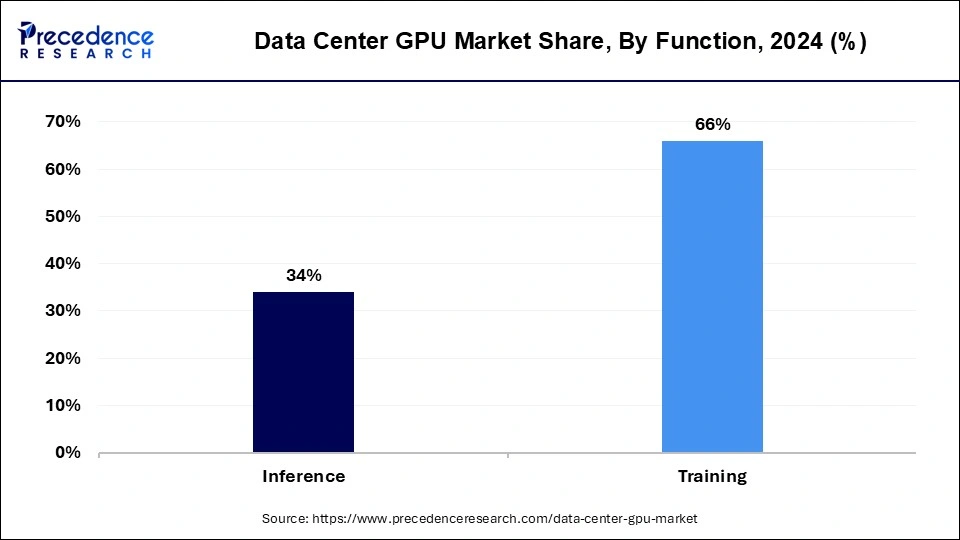

The training segment dominated the data center GPU market in 2024. Training uses large datasets to teach and optimize a machine learning model, a process that demands GPUs with high computational throughput and high memory bandwidth. This demand has underpinned the segment's leading share.

The inference segment is projected to gain a significant share of the data center GPU market in the coming years. Inference is the process of using a trained machine learning model to predict outcomes for new, unseen data. It is a computationally intensive task that requires high throughput and low latency, and it is essential for applications such as speech recognition, video analysis, and recommendation systems.

The market is segmented by deployment model, function, end-user, and geography.

