January 2025

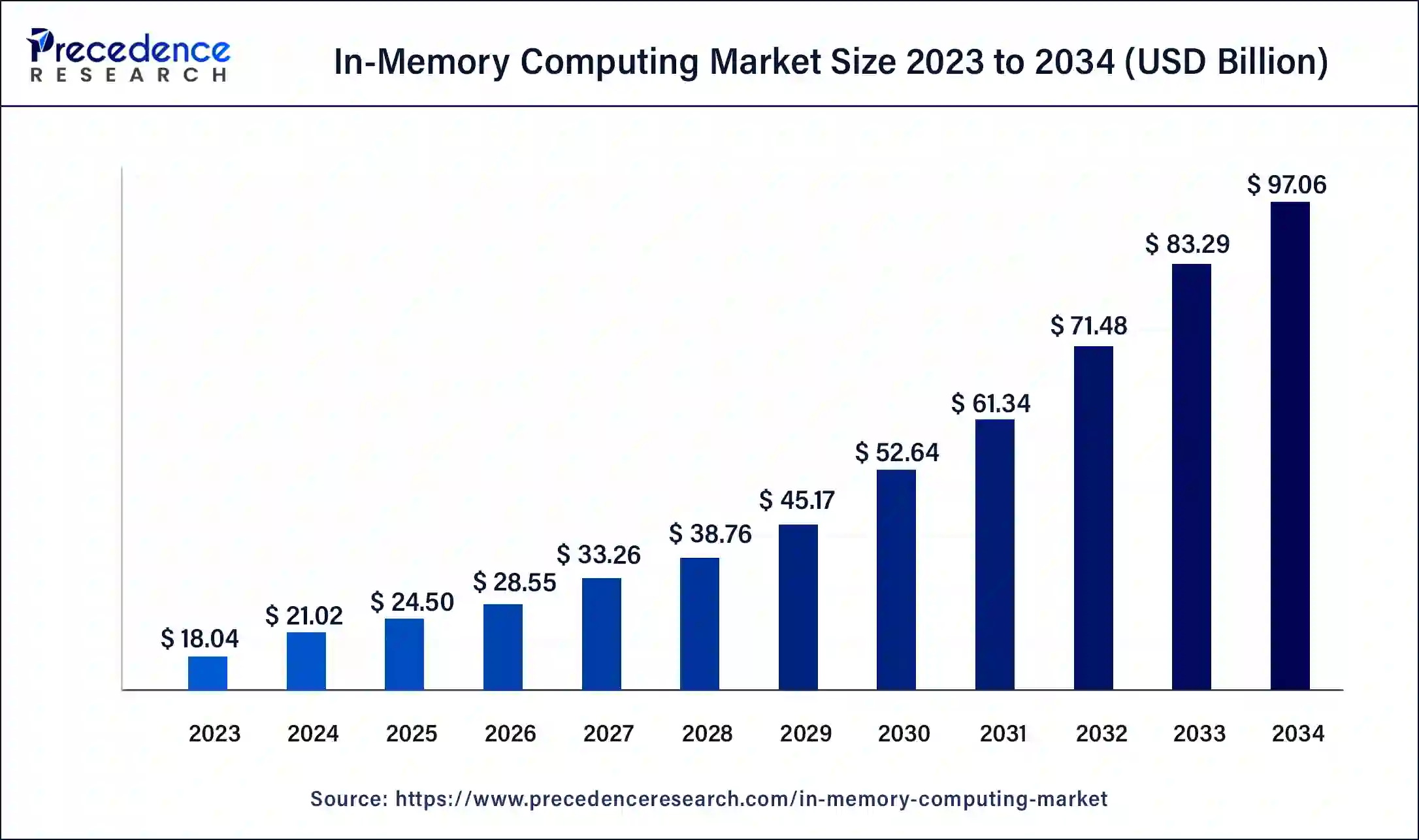

The global in-memory computing market size was USD 18.04 billion in 2023, calculated at USD 21.02 billion in 2024 and is expected to be worth around USD 97.06 billion by 2034. The market is slated to expand at 16.53% CAGR from 2024 to 2034.

The exponential pace of data generation, widespread adoption of AI, and technological innovation in the sector are driving growth in the in-memory computing market.
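As a quick consistency check on the headline figures, the growth rate can be recomputed from the 2024 and 2034 market sizes. The sketch below assumes the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1; the function names `cagr` and `project` are illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report, in USD billion.
MARKET_2024 = 21.02
MARKET_2034 = 97.06
REPORTED_CAGR = 0.1653

implied_rate = cagr(MARKET_2024, MARKET_2034, years=10)
projected_2034 = project(MARKET_2024, REPORTED_CAGR, years=10)

print(f"Implied CAGR 2024-2034: {implied_rate:.2%}")
print(f"2034 size at reported CAGR: USD {projected_2034:.2f} billion")
```

Under these assumptions the implied rate comes out at roughly 16.5%, in line with the reported CAGR, and compounding the 2024 figure at that rate lands close to the 2034 projection.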

In-memory computing refers to performing computational tasks entirely in the computer’s memory (e.g., RAM), which eliminates the need to transfer data back and forth between the system’s processing and memory units and substantially reduces latency. This addresses one of the most prominent obstacles in modern computing: the time and energy cost of data movement. Compared with traditional data storage options, the difference is stark: in-memory storage offers roughly 100,000 times lower latency and about 200 times higher bandwidth than spinning hard disk drives (HDDs).
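To give a sense of what ratios of this size mean in practice, the sketch below works through the arithmetic with assumed order-of-magnitude device figures. The specific numbers (about 100 ns per RAM access, about 10 ms per HDD random access, 30,000 MB/s versus 150 MB/s of bandwidth) are illustrative assumptions chosen to match the ratios in the text, not measurements from the report.

```python
# Assumed, order-of-magnitude device figures (illustrative, not from the report).
DRAM_LATENCY_NS = 100           # roughly 100 ns per random memory access
HDD_LATENCY_NS = 10_000_000     # roughly 10 ms per random disk access
DRAM_BANDWIDTH_MB_S = 30_000    # assumed sequential memory bandwidth
HDD_BANDWIDTH_MB_S = 150        # assumed sequential disk bandwidth

latency_ratio = HDD_LATENCY_NS // DRAM_LATENCY_NS
bandwidth_ratio = DRAM_BANDWIDTH_MB_S // HDD_BANDWIDTH_MB_S


def total_seconds(n_accesses: int, latency_ns: int) -> float:
    """Time spent waiting on `n_accesses` sequentially issued random accesses."""
    return n_accesses * latency_ns / 1e9


lookups = 1_000_000
hdd_seconds = total_seconds(lookups, HDD_LATENCY_NS)
dram_seconds = total_seconds(lookups, DRAM_LATENCY_NS)

print(f"Latency ratio (HDD / DRAM): {latency_ratio:,}x")
print(f"Bandwidth ratio (DRAM / HDD): {bandwidth_ratio}x")
print(f"{lookups:,} random lookups: HDD {hdd_seconds:,.0f} s, DRAM {dram_seconds:.1f} s")
```

With these assumed figures, a million dependent random lookups take hours on a spinning disk and a fraction of a second in memory, which is the gap the paragraph above describes.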

In-memory computing has a variety of applications, such as scientific computing, machine learning, database queries, and banking risk assessment. It is generally used for calculations in large-scale, complex systems, such as weather prediction models and Monte Carlo simulations. In-memory computing offers higher scalability and performance compared to traditional post-processing.
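A Monte Carlo risk calculation of the kind mentioned above can be sketched in a few lines. This is a minimal illustration that keeps every simulated outcome in RAM and reads a risk figure off the sorted results; the return distribution, its parameters, and the function names are hypothetical and are not drawn from the report.

```python
import random


def simulate_losses(n_paths: int, mean_return: float, volatility: float,
                    seed: int = 42) -> list[float]:
    """Draw one-day portfolio returns from a normal distribution, as losses."""
    rng = random.Random(seed)
    return [-rng.gauss(mean_return, volatility) for _ in range(n_paths)]


def value_at_risk(losses: list[float], confidence: float) -> float:
    """Loss threshold that is not exceeded in `confidence` of the simulated paths."""
    ordered = sorted(losses)
    index = min(int(confidence * len(ordered)), len(ordered) - 1)
    return ordered[index]


# Hypothetical parameters: 0.05% mean daily return, 2% daily volatility.
losses = simulate_losses(n_paths=100_000, mean_return=0.0005, volatility=0.02)
var_95 = value_at_risk(losses, confidence=0.95)

print(f"Simulated paths held in memory: {len(losses):,}")
print(f"95% one-day value at risk: {var_95:.2%} of portfolio value")
```

Because the full set of paths stays in memory, the same sample can be re-sorted or re-queried at other confidence levels without any disk access.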

In-memory computing is used in genomics, signal processing, recommendation systems, and data analytics. The extensive use of deep neural networks and the generation of large quantities of data have led to growing demand in the in-memory computing market. However, a lack of trained professionals and an absence of proven architectural patterns are hindering growth in the market.

The advent of AI technology in the in-memory computing market

The spread of AI-based technologies, such as deep neural networks and machine learning, across several industries is driving growth in the in-memory computing market. AI data processing requires parallel, simultaneous computation over large data sets, which creates a need to minimize data bottlenecks. Deep neural networks have become commonplace due to their applications in technologies such as autonomous vehicles, speech and image recognition, and natural language processing.

| Report Coverage | Details |
| --- | --- |
| Market Size by 2034 | USD 97.06 Billion |
| Market Size in 2023 | USD 18.04 Billion |
| Market Size in 2024 | USD 21.02 Billion |
| Market Growth Rate from 2024 to 2034 | CAGR of 16.53% |
| Largest Market | North America |
| Base Year | 2023 |
| Forecast Period | 2024 to 2034 |
| Segments Covered | Component, Deployment Method, Organization Size, Vertical, Applications, and Regions |
| Regions Covered | North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa |

Applications in big data

Big data refers to datasets so large and complex that traditional data management and analytics tools struggle to handle them in a feasible timeframe. Big data is characterized by high velocity, high volume, and high variety. With groundbreaking advancements in data science, data mining techniques, AI, and technologies such as Internet of Things sensors, edge computing, and connected devices, organizations are generating colossal amounts of data, including performance metrics, sensor readings, and more.

The generation of data demands sophisticated information processing and handling technologies to convert it into actionable insights and business intelligence through advanced analytics techniques, such as pattern recognition, statistical modeling, machine learning, and predictive forecasting.

A lack of trained professionals

A growing global labor shortage has led to increasing job vacancy rates in the past decade. This is hampering the growth of the in-memory computing market. After the loosening of pandemic restrictions, the demand for goods and services has picked up, but the supply of workers has not gone up in the same measure, leading to a shortage of labor in developed nations such as the United States, United Kingdom, and across the European Union.

The labor shortage is even more acute in the technology sector, where growing demand for tech talent across industries has created a highly competitive marketplace for skilled professionals. A recent survey conducted by Indeed found that 70% of technical workers had multiple job offers when accepting their most recent job. However, the permeation of AI across industries has accelerated the progression of technology significantly, so tech skills are becoming outdated more quickly than before.

Tech workers remain some of the most in-demand talent in the labor market today, despite reports about tech layoffs dominating the news for the better part of the year. Indeed's survey of tech worker behavior suggests that tech workers are not being affected by recent economic turbulence as much as some might think. Some might even argue that tech workers are benefiting from tech layoffs.

Rising DRAM costs

Demand for dynamic random access memory (DRAM) chips is high, driven by aggressive purchasing among Chinese cloud service providers and an overall rise in demand for in-memory computing products. For example, Samsung Electronics reported a 17-19% increase in the average selling price of DRAM, with sales rising by 22% to USD 9.22 billion. As a result, Samsung Electronics and SK Hynix have increased production at their largest DRAM chip manufacturing plants, in Pyeongtaek and Wuxi.

Demand for real-time business intelligence and low latency in finance applications

Banks have begun partnering with fintech companies to roll out open banking initiatives (Payment Services Directive), which entail making their payment services and customer account back-end systems available to other parties in the financial payment ecosystem. Payment processing systems must deliver speedy payments while maintaining airtight security. For service providers that need to handle massive volumes of complicated transactions, traditional disk-based databases are increasingly inadequate. Banks and credit, debit, and prepaid card providers are therefore looking to integrate in-memory processing frameworks, which are significantly faster than both HDD and SSD data storage systems, and this is driving the in-memory computing market.

The complexity of data handled by open banking also requires that consumer information be accessible in real time for processing payments. Keeping all the information in an in-memory database means that everything needed for transaction processing is available almost instantly. Integrating cloud-based storage with in-memory computing makes the storage capacity functionally unlimited. As a nascent market, open banking and on-demand fintech services provide a huge opportunity for players in the in-memory computing market.

The solutions segment dominated the in-memory computing market in 2023. In-memory computing solutions comprise in-memory databases, online analytical processing, online transaction processing, in-memory data grids, and data streaming. In-memory databases are used by enterprises for real-time analytics and decision-making in tasks such as fraud detection, stock trading, and ad targeting.

They are also used for caching, session storage, handling queues where rapid operations are required, and in gaming, where databases track millions of concurrent game states. Online analytical processing is used for data mining and business intelligence operations, as well as conducting complex analytical calculations. Online transaction processing is widely used in the fintech industry, while in-memory grids are used for large-scale, parallelized data processing applications.
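The in-memory database use case described above can be sketched with Python's standard `sqlite3` module, which supports a purely in-memory database via the special `":memory:"` path. The table layout, the sample rows, and the simple "too many transactions in a time window" rule below are hypothetical illustrations, not a method described in the report.

```python
import sqlite3

# ":memory:" creates a database that lives entirely in RAM.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (card_id TEXT, amount REAL, ts INTEGER)"
)

# Hypothetical sample data: (card, amount, timestamp in seconds).
sample_rows = [
    ("card-A", 25.0, 1000),
    ("card-A", 30.0, 1010),
    ("card-A", 900.0, 1020),
    ("card-B", 12.5, 1005),
]
conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", sample_rows)


def cards_over_limit(db: sqlite3.Connection, window_start: int,
                     window_end: int, max_count: int) -> list[tuple[str, int]]:
    """Return (card_id, count) for cards with more than `max_count`
    transactions inside the given time window."""
    cursor = db.execute(
        """
        SELECT card_id, COUNT(*) AS n
        FROM transactions
        WHERE ts BETWEEN ? AND ?
        GROUP BY card_id
        HAVING COUNT(*) > ?
        ORDER BY card_id
        """,
        (window_start, window_end, max_count),
    )
    return cursor.fetchall()


flagged = cards_over_limit(conn, window_start=1000, window_end=1060, max_count=2)
print(flagged)
```

Production systems would typically use a dedicated in-memory database or data grid rather than SQLite, but the pattern is the same: the working set stays in RAM so that each check avoids a disk round trip.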

The services segment is set to grow at the fastest rate in the in-memory computing market during the forecast period. In-memory computing coupled with cloud computing is used to accelerate system implementation. Several consulting organizations use in-memory computing to improve affordability and scalability and to achieve the required levels of performance.

The cloud segment held a significant share of the in-memory computing market in 2023. In-memory-based cloud computing has several advantages for secure data handling such as the use of granular permissions and federated roles. The use of cloud-based infrastructure reduces equipment and maintenance costs. The integration of cloud-based storage with in-memory computing gives businesses almost unlimited storage capacity.

The on-premises segment is projected to witness significant growth in the in-memory computing market during the studied years. On-premises computing offers added security and cost-effectiveness, and can operate without access to the internet. It also offers greater flexibility and customizability than cloud-based computing.

The large enterprises segment dominated the in-memory computing market in 2023. Larger enterprises usually need to handle larger volumes of data. Large amounts of raw data require robust data management systems to handle them and to find meaningful patterns, trends, and insights. In-memory processing also raises costs, but larger enterprises have enough resources to implement state-of-the-art technologies of this kind.

The SME segment is expected to expand rapidly in the in-memory computing market during the forecast period. There is rising awareness among smaller enterprises of the benefits of in-memory processing, including very high speeds and the removal of bottlenecks in disk-based processing. Along with better processing speed come higher storage capacity and better transfer speed. This is leading SMEs to increasingly adopt in-memory computing.

The BFSI segment dominated the in-memory computing market in 2023. Financial services are increasingly demanding real-time risk analysis, fraud detection, and high-frequency trading. In-memory computing is also used to increase the speed of underwriting processes.

The retail & e-commerce segment is expected to see notable growth in the in-memory computing market during the forecast period. In-memory computing is used in retail and e-commerce for personalized marketing, dynamic pricing, and inventory management, supporting higher sales, real-time optimized supply chains, and a better overall customer experience.

The risk management & fraud detection segment dominated the in-memory computing market in 2023. In-memory computing speeds up processes such as the machine learning used in risk management practices in the finance sector. It helps consolidate risk calculations and provide real-time analytics for organizations. In-memory processing helps tackle issues such as the high cost of information access, heavy loads on source systems, and limited source APIs. In-memory computing also helps enhance digital integration hubs, optimize portfolio management, and speed up post-trade analysis.

The others segment is set to see notable growth in the in-memory computing market over the forecast period. Image processing, route optimization, claim processing, and modeling benefit from the use of emerging in-memory computing technology. In these workloads, in-memory algorithms help achieve better latency and throughput while reducing area cost, which makes them feasible to implement inside size-limited memory arrays. More complex in-memory operations, such as image convolution, can also help maximize parallelism.

North America dominated the in-memory computing market in 2023. Ongoing research and development efforts, advances in and widespread adoption of artificial intelligence, and a robust IT infrastructure in the region have led to high demand in the in-memory computing market. The presence of several AI firms in the region has also led to significant demand.

Asia Pacific is expected to host the fastest-growing in-memory computing market during the forecast period between 2024 and 2034. Rapid rates of urbanization and changing consumer demographics have led to significant growth in the e-commerce sector. Among companies in the in-memory computing space, Samsung Electronics is one of the largest patent filers. The company’s patent is aimed at a neural network processor with memory containing computer-readable instructions and kernel intermediate data, which includes multiple calculated values derived from weight values within kernel data. Other top companies in the space include Semiconductor Energy Laboratory, TDK, and Shanghai Cambricon Information Technology.

Segments Covered in the Report

By Component

By Deployment Method

By Organization Size

By Vertical

By Applications

By Geography

